The zebra puzzle is a well-known logic puzzle. It is often called Einstein's Puzzle or Einstein's Riddle because it is said to have been invented by Albert Einstein as a boy.[1] The puzzle is also sometimes attributed to Lewis Carroll.[2] However, there is no known evidence for Einstein's or Carroll's authorship, and the original puzzle cited below mentions cigarette brands, such as Kools, that did not exist during Carroll's lifetime or Einstein's boyhood.

There are several versions of this puzzle. The version below is quoted from the first known publication in Life International magazine on December 17, 1962. The March 25, 1963 issue contained the solution given below and the names of several hundred solvers from around the world.


Text of the original puzzle

1. There are five houses.

2. The Englishman lives in the red house.

3. The Spaniard owns the dog.

4. Coffee is drunk in the green house.

5. The Ukrainian drinks tea.

6. The green house is immediately to the right of the ivory house.

7. The Old Gold smoker owns snails.

8. Kools are smoked in the yellow house.

9. Milk is drunk in the middle house.

10. The Norwegian lives in the first house.

11. The man who smokes Chesterfields lives in the house next to the man with the fox.

12. Kools are smoked in the house next to the house where the horse is kept. (should be "... a house ...", see the note on rule 12 below)

13. The Lucky Strike smoker drinks orange juice.

14. The Japanese smokes Parliaments.

15. The Norwegian lives next to the blue house.

Now, who drinks water? Who owns the zebra? In the interest of clarity, it must be added that each of the five houses is painted a different color, and their inhabitants are of different national extractions, own different pets, drink different beverages and smoke different brands of American cigarets [sic]. One other thing: in statement 6, right means your right.

– Life International, December 17, 1962

The premises leave out some details, notably that the houses are in a row.

Since neither water nor a zebra is mentioned in the clues, there exists a reductive solution to the puzzle, namely that no one owns a zebra or drinks water. If, however, the questions are read as "Given that one resident drinks water, which is it?" and "Given that one resident owns a zebra, which is it?" then the puzzle becomes a non-trivial challenge to inferential logic. (A frequent variant of the puzzle asks "Who owns the fish?")

It is possible not only to deduce the answers to the two questions but to figure out who lives where, in what color house, keeping what pet, drinking what drink, and smoking what brand of cigarettes.

Read literally, rule 12 leads to a contradiction. It should have read "Kools are smoked in a house next to the house where the horse is kept", as opposed to "the house": the definite article implies that only one house is next to the house with the horse, which in turn implies that the horse is kept in either the leftmost or the rightmost house, whereas in the solution below it is kept in the 2nd house. The text above has been kept as it is, since it is meant to present the puzzle as originally published.
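A tiny sketch of the point about the definite article: in a row of five houses, only the two end houses have exactly one neighbor. The names `neighbors` and `HORSE_HOUSE` are illustrative, and the horse's position is taken from the solution table below.

```python
def neighbors(house, count=5):
    """Houses adjacent to `house` in a row of `count` houses (numbered from 1)."""
    return [h for h in (house - 1, house + 1) if 1 <= h <= count]


# Literal reading of rule 12: "the house next to" presupposes exactly one neighbor,
# which is only true for the two end houses.
unique_neighbor_houses = [h for h in range(1, 6) if len(neighbors(h)) == 1]
print(unique_neighbor_houses)  # [1, 5]

# In the solution the horse is kept in the 2nd house, which has two neighbors,
# so only the intended reading ("a house next to") can hold there.
HORSE_HOUSE = 2
print(neighbors(HORSE_HOUSE))  # [1, 3]
```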

Solution

| House | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Color | Yellow | Blue | Red | Ivory | Green |
| Nationality | Norwegian | Ukrainian | Englishman | Spaniard | Japanese |
| Drink | Water | Tea | Milk | Orange juice | Coffee |
| Smoke | Kools | Chesterfield | Old Gold | Lucky Strike | Parliament |
| Pet | Fox | Horse | Snails | Dog | Zebra |
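The table can also be reproduced mechanically. The sketch below is a plain brute-force search, not the step-by-step method that follows: it tries every ordering of each attribute over the five houses and prunes as soon as a clue fails. It assumes houses are numbered left to right and reads rule 12 as "a house next to"; `solve` and `by_house` are illustrative names.

```python
from itertools import permutations

FIRST, MIDDLE = 0, 2                      # 0-based indices; houses numbered left to right
ORDERINGS = list(permutations(range(5)))  # every way to place five items in five houses


def next_to(a, b):
    return abs(a - b) == 1


def by_house(placement):
    """Turn {name: house_index} into a list of names ordered by house."""
    row = [None] * 5
    for name, house in placement.items():
        row[house] = name
    return row


def solve():
    solutions = []
    for red, green, ivory, yellow, blue in ORDERINGS:
        if green != ivory + 1:                                              # 6
            continue
        for english, spaniard, ukrainian, norwegian, japanese in ORDERINGS:
            if english != red or norwegian != FIRST:                        # 2, 10
                continue
            if not next_to(norwegian, blue):                                # 15
                continue
            for coffee, tea, milk, juice, water in ORDERINGS:
                if coffee != green or tea != ukrainian or milk != MIDDLE:   # 4, 5, 9
                    continue
                for old_gold, kools, chesterfield, lucky, parliament in ORDERINGS:
                    if kools != yellow or lucky != juice or parliament != japanese:  # 8, 13, 14
                        continue
                    for dog, snails, fox, horse, zebra in ORDERINGS:
                        if dog != spaniard or snails != old_gold:           # 3, 7
                            continue
                        # 11 and 12, with rule 12 read as "a house next to"
                        if not (next_to(chesterfield, fox) and next_to(kools, horse)):
                            continue
                        solutions.append({
                            "Color": by_house({"Red": red, "Green": green, "Ivory": ivory,
                                               "Yellow": yellow, "Blue": blue}),
                            "Nationality": by_house({"Englishman": english, "Spaniard": spaniard,
                                                     "Ukrainian": ukrainian, "Norwegian": norwegian,
                                                     "Japanese": japanese}),
                            "Drink": by_house({"Coffee": coffee, "Tea": tea, "Milk": milk,
                                               "Orange juice": juice, "Water": water}),
                            "Smoke": by_house({"Old Gold": old_gold, "Kools": kools,
                                               "Chesterfield": chesterfield,
                                               "Lucky Strike": lucky, "Parliament": parliament}),
                            "Pet": by_house({"Dog": dog, "Snails": snails, "Fox": fox,
                                             "Horse": horse, "Zebra": zebra}),
                        })
    return solutions


if __name__ == "__main__":
    for solution in solve():
        for attribute, row in solution.items():
            print(f"{attribute:<12}", " | ".join(row))
```

Exactly one grid survives the search, and it matches the table; the nested loops keep the search small because most orderings are rejected before the inner loops run.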

Step 1

We are told the Norwegian lives in the 1st house (10). It does not matter whether this is counted from the left or from the right; we just need to know the order, not the direction.

From (10) and (15), the 2nd house is blue. What color is the 1st house? Not green or ivory, because those two houses must be next to each other (6), and the 2nd house is blue. Not red, because the Englishman lives there (2). Therefore the 1st house is yellow.

It follows that Kools are smoked in the 1st house (8) and the horse is kept in the 2nd house (12), the only house next to the 1st.

So what is drunk by the Norwegian in the 1st, yellow, Kools-filled house? Not tea since the Ukrainian drinks that (5). Not coffee since that is drunk in the green house (4). Not milk since that is drunk in the 3rd house (9). Not orange juice since the drinker of orange juice smokes Lucky Strikes (13). Therefore it is water (the missing beverage) that is drunk by the Norwegian.
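Step 1's eliminations can be checked by brute force over the 120 possible color orders, with the drink eliminations written out as plain data. This is a sketch only: the clues involved are hard-coded, and `step1_colorings` and `EXCLUDED` are illustrative names.

```python
from itertools import permutations

COLORS = ["Yellow", "Blue", "Red", "Ivory", "Green"]
DRINKS = {"Tea", "Coffee", "Milk", "Orange juice", "Water"}


def step1_colorings():
    """Color orders (index 0 = 1st house) allowed by clues 2, 6, 10 and 15."""
    allowed = []
    for order in permutations(COLORS):
        if order[1] != "Blue":
            continue  # the Norwegian is in the 1st house (10), next to the blue one (15)
        if order.index("Green") != order.index("Ivory") + 1:
            continue  # green is immediately right of ivory (6)
        if order[0] == "Red":
            continue  # the red house belongs to the Englishman (2), not the Norwegian
        allowed.append(order)
    return allowed


orderings = step1_colorings()
first_house_colors = {order[0] for order in orderings}
print(first_house_colors)  # {'Yellow'}

# Drinks excluded for the Norwegian's yellow, Kools-smoking 1st house.
EXCLUDED = {
    "Tea": "the Ukrainian drinks tea (5)",
    "Coffee": "coffee goes with the green house (4)",
    "Milk": "milk is drunk in the 3rd house (9)",
    "Orange juice": "its drinker smokes Lucky Strikes (13), but Kools are smoked here (8)",
}
print(DRINKS - set(EXCLUDED))  # {'Water'}
```

Two color orders survive (yellow, blue, red, ivory, green and yellow, blue, ivory, green, red); both start with yellow, which is all Step 1 needs.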

Step 2

So what is smoked in the 2nd, blue house, where we know the horse is also kept? Not Kools, which are smoked in the 1st house (8). Not Old Gold, since that house must have snails (7).

Let's suppose Lucky Strikes are smoked here, which means orange juice is drunk here (13). Then consider: who lives here? Not the Norwegian, since he lives in the 1st house (10). Not the Englishman, since he lives in a red house (2). Not the Spaniard, since he owns a dog (3). Not the Ukrainian, since he drinks tea (5). Not the Japanese, who smokes Parliaments (14). Since this is an impossible situation, Lucky Strikes are not smoked in the 2nd house.

Let's suppose Parliaments are smoked here, which means the Japanese man lives here (14). Then consider: what is drunk here? Not tea, since the Ukrainian drinks that (5). Not coffee, since that is drunk in the green house (4). Not milk, since that is drunk in the 3rd house (9). Not water, since the Norwegian drinks that in the 1st house. Not orange juice, since the drinker of that smokes Lucky Strikes (13). Again, since this is an impossible situation, Parliaments are not smoked in the 2nd house.

Therefore, Chesterfields are smoked in the 2nd house.

So who smokes Chesterfields and keeps the horse in the 2nd, blue house? Not the Norwegian, who lives in the 1st house (10). Not the Englishman, who lives in a red house (2). Not the Spaniard, who owns a dog (3). Not the Japanese, who smokes Parliaments (14). Therefore, the Ukrainian lives in the 2nd house, where he drinks tea (5)!
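Step 2's case analysis can be phrased as a filter over every (nationality, drink, smoke) combination for the 2nd house. Comparing two booleans with `==` encodes "if and only if", which is valid here because each value belongs to exactly one house. A sketch under those assumptions; `fits_second_house` is an illustrative name.

```python
from itertools import product

NATIONS = ["Englishman", "Spaniard", "Ukrainian", "Norwegian", "Japanese"]
DRINKS = ["Coffee", "Tea", "Milk", "Orange juice", "Water"]
SMOKES = ["Old Gold", "Kools", "Chesterfield", "Lucky Strike", "Parliament"]

# What Step 1 established about the 2nd house.
COLOR, PET = "Blue", "Horse"


def fits_second_house(nation, drink, smoke):
    """True if this combination breaks none of the clues that apply to a single house."""
    return all([
        nation != "Norwegian",                                   # he is in the 1st house (10)
        drink not in ("Milk", "Water"),                          # 3rd house (9); 1st house (Step 1)
        (nation == "Englishman") == (COLOR == "Red"),            # 2
        (nation == "Spaniard") == (PET == "Dog"),                # 3
        (drink == "Coffee") == (COLOR == "Green"),               # 4
        (nation == "Ukrainian") == (drink == "Tea"),             # 5
        (smoke == "Old Gold") == (PET == "Snails"),              # 7
        (smoke == "Kools") == (COLOR == "Yellow"),               # 8
        (smoke == "Lucky Strike") == (drink == "Orange juice"),  # 13
        (nation == "Japanese") == (smoke == "Parliament"),       # 14
    ])


candidates = [combo for combo in product(NATIONS, DRINKS, SMOKES) if fits_second_house(*combo)]
print(candidates)  # [('Ukrainian', 'Tea', 'Chesterfield')]
```

The Japanese branch fails exactly as in the text: he cannot drink tea, orange juice would force Lucky Strikes, and he must smoke Parliaments.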

Step 3

Since Chesterfields are smoked in the 2nd house, we know from (11) that the fox is kept in either the 1st house or the 3rd house.

Let us first assume that the fox is kept in the 3rd house. Then consider: what is drunk by the man who smokes Old Golds and keeps snails (7)? We have already ruled out water and tea from the above steps. It cannot be orange juice since the drinker of that smokes Lucky Strikes (13). It cannot be milk because that is drunk in the 3rd house (9), where we have assumed a fox is kept. This leaves coffee, which we know is drunk in the green house (4).

So if the fox is kept in the 3rd house, then someone smokes Old Golds, keeps snails and drinks coffee in a green house. Who can this person be? Not the Norwegian who lives in the 1st house (10). Not the Ukrainian who drinks tea (5). Not the Englishman who lives in a red house (2). Not the Japanese who smokes Parliaments (14). Not the Spaniard who owns a dog (3).

This is impossible. So it follows that the fox is not kept in the 3rd house, but in the 1st house.
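The fox argument can be sketched as a function that lists where the Old Gold smoker could still live for each possible fox position. It checks only the necessary conditions used in the text, assumes the state reached after Step 2, and uses illustrative names (`fox_houses`, `old_gold_options`).

```python
CHESTERFIELD_HOUSE = 2                              # established in Step 2 (houses numbered from 1)
UNPLACED = ("Englishman", "Spaniard", "Japanese")   # nationalities not yet placed


def fox_houses():
    """Clue 11: the fox is kept next to the Chesterfield house."""
    return [h for h in (CHESTERFIELD_HOUSE - 1, CHESTERFIELD_HOUSE + 1) if 1 <= h <= 5]


def old_gold_options(fox_house):
    """(house, drink, nationality) still open to the Old Gold smoker, who keeps snails (7)."""
    options = []
    for house in (3, 4, 5):             # houses 1 and 2 smoke Kools and Chesterfields
        if house == fox_house:
            continue                    # that house keeps the fox, so not the snails
        # Milk is drunk in the 3rd house (9); the 4th and 5th share coffee and orange juice.
        for drink in (["Milk"] if house == 3 else ["Coffee", "Orange juice"]):
            if drink == "Orange juice":
                continue                # its drinker smokes Lucky Strikes (13)
            for nation in UNPLACED:
                if nation == "Spaniard":
                    continue            # he owns the dog (3)
                if nation == "Japanese":
                    continue            # he smokes Parliaments (14)
                if drink == "Coffee" and nation == "Englishman":
                    continue            # coffee means green (4); the Englishman's house is red (2)
                options.append((house, drink, nation))
    return options


for fox_house in fox_houses():
    print(fox_house, old_gold_options(fox_house))
# 1 [(3, 'Milk', 'Englishman')]
# 3 []
```

With the fox in the 3rd house nothing survives, which is the contradiction above; with the fox in the 1st house the only option left is the 3rd house.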

Step 4

From what we have found so far, we know that coffee and orange juice are drunk in the 4th and 5th houses. It doesn't matter which is drunk in which; we will just call them the coffee house and the orange juice house.

So where does the man who smokes Old Gold and keeps snails live? Not the orange juice house, since Lucky Strike is smoked there (13).

Suppose this man lives in the coffee house. Then we have someone who smokes Old Gold, keeps snails and drinks coffee in a green (4) house. Again, by the same reasoning as in Step 3, this is impossible.

Therefore, the Old Gold-smoking, snail-keeping man lives in the 3rd house.

It follows that Parliaments are smoked in the green, coffee-drinking house, by the Japanese man (14), since Lucky Strikes go with orange juice (13). The Spaniard owns the dog (3), so he cannot live in the 3rd house, where snails are kept; he must be the one who drinks orange juice, smokes Lucky Strikes and keeps the dog. By extension, the Englishman must live in the 3rd house, which is red (2). By process of elimination, the Spaniard's house is the ivory one, and since the green house is immediately to the right of the ivory house (6), the Spaniard lives in the 4th house and the Japanese in the 5th.

By now we have filled in every variable except one, and it is clear that the Japanese is the one who keeps the zebra.
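The bookkeeping at the end of Step 4 can be sketched as a two-way case split: both orders of coffee and orange juice are filled in, and clue 6 keeps only one. The grid literal simply restates the facts from Steps 1 to 4; `finish` is an illustrative name.

```python
from itertools import permutations


def finish():
    """Fill in the 4th and 5th houses after Step 4 (houses numbered from 1)."""
    results = []
    for coffee, juice in permutations((4, 5)):       # Step 4 left this order open
        grid = {
            1: {"Color": "Yellow", "Nationality": "Norwegian", "Drink": "Water",
                "Smoke": "Kools", "Pet": "Fox"},
            2: {"Color": "Blue", "Nationality": "Ukrainian", "Drink": "Tea",
                "Smoke": "Chesterfield", "Pet": "Horse"},
            3: {"Drink": "Milk", "Smoke": "Old Gold", "Pet": "Snails"},
            coffee: {"Drink": "Coffee", "Color": "Green"},              # 4
            juice: {"Drink": "Orange juice", "Smoke": "Lucky Strike"},  # 13
        }
        # Parliament is the last brand, so it goes to the coffee house, with the Japanese (14).
        grid[coffee].update(Smoke="Parliament", Nationality="Japanese")
        # The Spaniard owns the dog (3), so he cannot be in the snail-keeping 3rd house.
        grid[juice].update(Nationality="Spaniard", Pet="Dog")
        grid[3].update(Nationality="Englishman", Color="Red")          # 2
        grid[juice]["Color"] = "Ivory"                                  # last color left
        grid[coffee]["Pet"] = "Zebra"                                   # last pet left
        if coffee == juice + 1:        # 6: green (coffee) immediately right of ivory
            results.append(grid)
    return results


for house, attributes in sorted(finish()[0].items()):
    print(house, attributes)
```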

Right-to-left solution

The above solution assumed that the first house is the leftmost house. If we assume that the first house is the rightmost house, we find the following solution. Again the Japanese keeps the zebra, and the Norwegian drinks water.

| House | 5 | 4 | 3 | 2 | 1 |
|---|---|---|---|---|---|
| Color | Ivory | Green | Red | Blue | Yellow |
| Nationality | Spaniard | Japanese | Englishman | Ukrainian | Norwegian |
| Drink | Orange juice | Coffee | Milk | Tea | Water |
| Smoke | Lucky Strike | Parliament | Old Gold | Chesterfield | Kools |
| Pet | Dog | Zebra | Snails | Horse | Fox |
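Because clue 6 refers to the reader's right rather than to house numbers, renumbering from the right changes only which of the 4th and 5th houses is green. The sketch below assumes, as Steps 1 and 4 note, that the other deductions do not depend on direction; it checks clue 6 in display columns and rebuilds the table above. `clue6_holds` and `swap_4_and_5` are illustrative names.

```python
# Rows of the left-to-right solution, keyed by house number (from the Solution table).
LTR = {
    1: ("Yellow", "Norwegian", "Water", "Kools", "Fox"),
    2: ("Blue", "Ukrainian", "Tea", "Chesterfield", "Horse"),
    3: ("Red", "Englishman", "Milk", "Old Gold", "Snails"),
    4: ("Ivory", "Spaniard", "Orange juice", "Lucky Strike", "Dog"),
    5: ("Green", "Japanese", "Coffee", "Parliament", "Zebra"),
}


def clue6_holds(solution, display_order):
    """Clue 6 in the reader's frame: green is the column immediately right of ivory."""
    column = {solution[house][0]: i for i, house in enumerate(display_order)}
    return column["Green"] == column["Ivory"] + 1


def swap_4_and_5(solution):
    """Exchange the 4th and 5th houses together with all their properties."""
    out = dict(solution)
    out[4], out[5] = solution[5], solution[4]
    return out


RTL_ORDER = (5, 4, 3, 2, 1)                # house 1 is now the rightmost column
print(clue6_holds(LTR, (1, 2, 3, 4, 5)))   # True
print(clue6_holds(LTR, RTL_ORDER))         # False
RTL = swap_4_and_5(LTR)
print(clue6_holds(RTL, RTL_ORDER))         # True
for house in RTL_ORDER:
    print(house, RTL[house])
```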

Other versions

Other versions of the puzzle have one or more of the following differences from the original puzzle:

- Some colors, nationalities, cigarette brands and pets are replaced with other ones, and the clues are given in a different order. These changes do not affect the logic of the puzzle.

- One rule says that the green house is on the left of the white house (white taking the place of ivory), instead of on the right of it. This change has the same effect as numbering the houses from right to left instead of left to right (see the right-to-left solution above): it only swaps the two corresponding houses, with all their properties, but makes the puzzle a bit easier. Note that omitting the word "immediately", as in "immediately to the left/right of the white house", leads to multiple solutions.

- The clue "The man who smokes Blend has a neighbor who drinks water" is redundant and therefore makes the puzzle even easier. Originally, this clue existed only in the form of the question "Who drinks water?"

One such version reads:

- The British person lives in the red house.

- The Swede keeps dogs as pets.

- The Dane drinks tea.

- The green house is on the left of the white house.

- The green homeowner drinks coffee.

- The man who smokes Pall Mall keeps birds.

- The owner of the yellow house smokes Dunhill.

- The man living in the center house drinks milk.

- The Norwegian lives in the first house.

- The man who smokes Blend lives next to the one who keeps cats.

- The man who keeps the horse lives next to the man who smokes Dunhill.

- The man who smokes Bluemaster drinks beer.

- The German smokes Prince.

- The Norwegian lives next to the blue house.

- The man who smokes Blend has a neighbor who drinks water.

Another version replaces the cigarette brands with car makes:

- The Englishman lives in the red house.

- The Spaniard owns the dog.

- Coffee is drunk in the green house.

- The Ukrainian drinks tea.

- The green house is immediately to the right of the ivory house.

- The Ford driver owns the snail.

- A Toyota driver lives in the yellow house.

- Milk is drunk in the middle house.

- The Norwegian lives in the first house to the left.

- The man who drives the Chevy lives in the house next to the man with the fox.

- A Toyota is parked next to the house where the horse is kept.

- The Dodge owner drinks orange juice.

- The Japanese owns a Porsche.

- The Norwegian lives next to the blue house.
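Relabelling shows why such substitutions leave the logic unchanged. Pairing each car clue with the cigarette clue it mirrors gives a one-to-one renaming, and applying it to the Smoke row of the left-to-right solution satisfies the car clues in the last list (which fixes the first house on the left). The mapping is read off the clue pairs and is an assumption of this sketch, not part of the source.

```python
# Renaming read off matching clue pairs (e.g. "The Old Gold smoker owns snails" and
# "The Ford driver owns the snail"); an assumption of this sketch.
BRAND_TO_CAR = {
    "Old Gold": "Ford",
    "Kools": "Toyota",
    "Chesterfield": "Chevy",
    "Lucky Strike": "Dodge",
    "Parliament": "Porsche",
}

# Left-to-right solution rows from the Solution table (index 0 = 1st house).
COLOR = ["Yellow", "Blue", "Red", "Ivory", "Green"]
NATIONALITY = ["Norwegian", "Ukrainian", "Englishman", "Spaniard", "Japanese"]
DRINK = ["Water", "Tea", "Milk", "Orange juice", "Coffee"]
SMOKE = ["Kools", "Chesterfield", "Old Gold", "Lucky Strike", "Parliament"]
PET = ["Fox", "Horse", "Snails", "Dog", "Zebra"]

car_row = [BRAND_TO_CAR[brand] for brand in SMOKE]


def house_of(row, value):
    return row.index(value)


# The car-specific clues of that version, checked against the renamed grid.
checks = {
    "The Ford driver owns the snail": house_of(car_row, "Ford") == house_of(PET, "Snails"),
    "A Toyota driver lives in the yellow house": house_of(car_row, "Toyota") == house_of(COLOR, "Yellow"),
    "The Chevy driver lives next to the fox": abs(house_of(car_row, "Chevy") - house_of(PET, "Fox")) == 1,
    "A Toyota is parked next to the horse": abs(house_of(car_row, "Toyota") - house_of(PET, "Horse")) == 1,
    "The Dodge owner drinks orange juice": house_of(car_row, "Dodge") == house_of(DRINK, "Orange juice"),
    "The Japanese owns a Porsche": house_of(car_row, "Porsche") == house_of(NATIONALITY, "Japanese"),
}
print(car_row)               # ['Toyota', 'Chevy', 'Ford', 'Dodge', 'Porsche']
print(all(checks.values()))  # True
```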

References

1. Stangroom, Jeremy (2009). Einstein's Riddle: Riddles, Paradoxes, and Conundrums to Stretch Your Mind. Bloomsbury USA. pp. 10–11. ISBN 978-1-59691-665-4.

2. Little, James; Gebruers, Cormac; Bridge, Derek; Freuder, Eugene. "Capturing Constraint Programming Experience: A Case-Based Approach" (PDF). Cork Constraint Computation Centre, University College, Cork, Ireland. http://www.cs.ucc.ie/~dgb/papers/Little-Et-Al-2002.pdf. Retrieved 2009-09-05.

External links

- Generator of logic puzzles similar to the Zebra Puzzle

- Formulation and solution in ALCOIF Description Logic

- Oz/Mozart Solution

- Common Lisp and C/C++ solutions

- English solution (PDF) - structured as a group activity for teaching English as a foreign language

- A GNU MathProg model for solving the problem, included with the GNU Linear Programming Kit: http://www.sfr-fresh.com/unix/misc/glpk-4.45.tar.gz:a/glpk-4.45/examples/zebra.mod

- Solution to Einstein's puzzle using the method of elimination

- Formulation and solution in Python using python-constraint

![[X,P] = X P - P X = i \hbar](http://upload.wikimedia.org/math/0/3/4/0342ffe593429f253ff09a217ad29be5.png) (see

(see

.

.

, the following formula holds:

, the following formula holds:

changes appreciably.

changes appreciably.

, so that:

, so that:![[p,x] = p x - x p = -i\hbar \left( {d\over dx} x - x {d\over dx} \right) = - i \hbar.](http://upload.wikimedia.org/math/e/2/0/e209eb230eb1a01899da35b25da38e24.png)

and

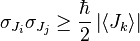

and  . On the other hand, the expectation value of the product AB is always greater than the magnitude of its imaginary part and this last statement can be written as

. On the other hand, the expectation value of the product AB is always greater than the magnitude of its imaginary part and this last statement can be written as

![\langle A^2 \rangle_\psi \langle B^2 \rangle_\psi \ge {1\over 4} |\langle [A,B]\rangle_\psi|^2](http://upload.wikimedia.org/math/a/4/f/a4f98ccb2cba18aad265e837cbdfa574.png)

![\sigma_A\sigma_B \ge \frac{1}{2} \left|\left\langle\left[{A},{B}\right]\right\rangle\right|](http://upload.wikimedia.org/math/f/8/3/f8393ca8b47169c71f08ca74d35cce41.png)

for

for  for

for ![[A - \lang A\rang, B - \lang B\rang] = [ A , B ].](http://upload.wikimedia.org/math/c/1/2/c12f985e7adaf649217b038c4c613295.png)

.

.![\sigma_A \sigma_B \geq \sqrt{ \frac{1}{4}\left|\left\langle\left[{A},{B}\right]\right\rangle\right|^2

+{1\over 4} \left|\left\langle\left\{ A-\langle A\rangle,B-\langle B\rangle \right\} \right\rangle \right|^2}](http://upload.wikimedia.org/math/f/a/2/fa233527d3f84d7356824a9a9a448a8a.png)

![[x,p_x]=i\hbar](http://upload.wikimedia.org/math/a/5/4/a54264a655e2e824a033aa70f53e51d5.png) :

:

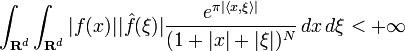

is nonzero cannot both be small. Specifically, it is impossible for a function ƒ in L2(R) and its Fourier transform to both be

is nonzero cannot both be small. Specifically, it is impossible for a function ƒ in L2(R) and its Fourier transform to both be

which is only known if either

which is only known if either

(

( such that

such that

is such that

is such that

and

and  positive definite matrix.

positive definite matrix. is such that

is such that